Flint

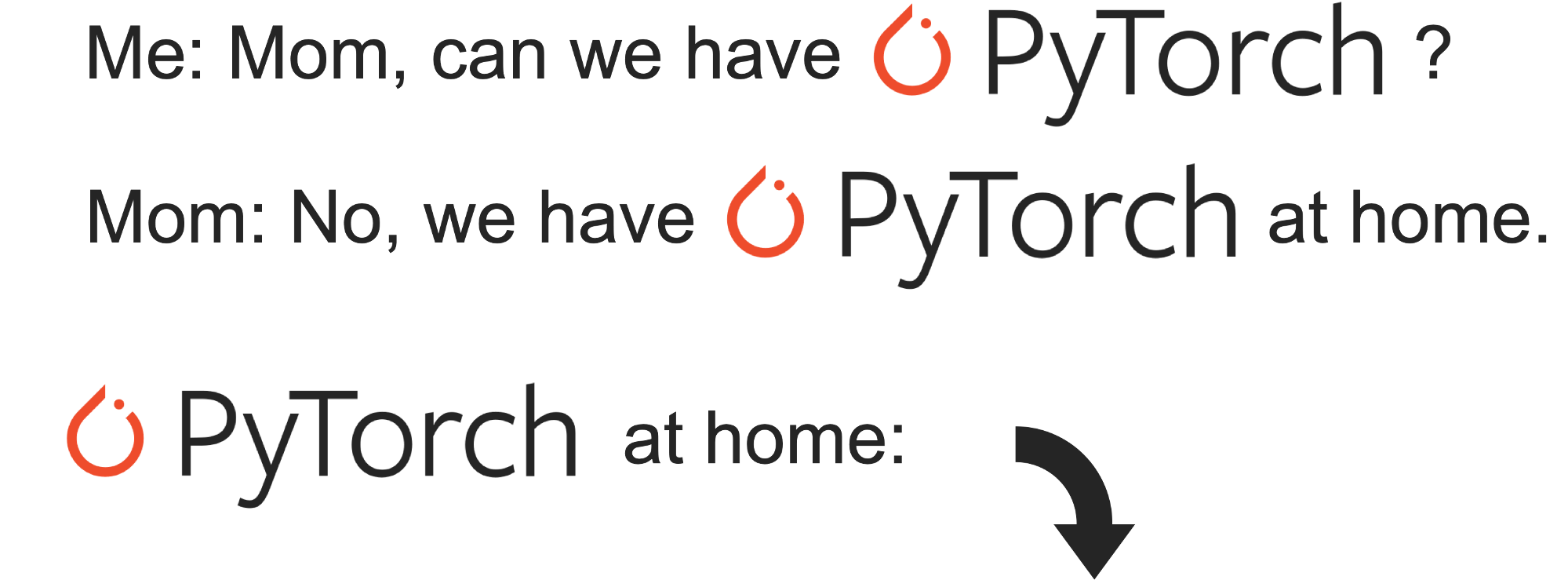

A toy deep learning framework implemented from scratch in NumPy, with a PyTorch-like API. I’m trying to make it as clean as possible.

Flint is not as powerful as torch, but it is still able to start a fire.

Installation

git clone https://github.com/Renovamen/flint.git

cd flint

python setup.py install

or

pip install git+https://github.com/Renovamen/flint.git --upgrade

API Documentation

Tutorials

Features

Core

Supports autograd (automatic differentiation) for the following operations:

Add

Subtract

Negative

Multiply

Divide

Matmul

Power

Natural Logarithm

Exponential

Sum

Max

Softmax

Log Softmax

View

Transpose

Permute

Squeeze

Unsqueeze

Padding
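
To illustrate what autograd support for an operation involves, here is a minimal sketch of reverse-mode differentiation for just Add and Multiply. It is not Flint's implementation: the `Scalar` class and its attributes are hypothetical names, and it works on plain Python floats rather than NumPy arrays to stay short.

```python
class Scalar:
    """Hypothetical scalar value that records how it was computed."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents      # inputs that produced this value
        self._grad_fns = grad_fns    # one local-gradient function per parent

    def __add__(self, other):
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Scalar(self.data + other.data, (self, other),
                      (lambda g: g, lambda g: g))

    def __mul__(self, other):
        # d(a * b)/da = b and d(a * b)/db = a
        return Scalar(self.data * other.data, (self, other),
                      (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order the graph topologically so that a node's gradient is
        # fully accumulated before it is pushed to its parents.
        order, seen = [], set()

        def visit(node):
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent in node._parents:
                visit(parent)
            order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, grad_fn in zip(node._parents, node._grad_fns):
                parent.grad += grad_fn(node.grad)


x = Scalar(2.0)
y = Scalar(3.0)
z = x * y + x      # dz/dx = y + 1 = 4, dz/dy = x = 2
z.backward()
```

Each remaining operation in the list would follow the same pattern: compute the forward value, then register one local-gradient function per input.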

Layers

Linear

Convolution (1D / 2D)

MaxPooling (1D / 2D)

Unfold

Flatten

Dropout

Sequential

Identity

Optimizers

SGD

Momentum

Adagrad

RMSprop

Adadelta

Adam
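
As a rough illustration of what an optimizer step does, the sketch below applies SGD with momentum to a one-parameter quadratic. The function name, its signature, and the update form (velocity accumulates raw gradients, as in PyTorch's convention) are assumptions made for this example; Flint's own optimizers may be formulated differently.

```python
def sgd_momentum_step(params, grads, velocity, lr=0.1, momentum=0.9):
    """One in-place update: v = momentum * v + g, then p = p - lr * v."""
    for i, g in enumerate(grads):
        velocity[i] = momentum * velocity[i] + g
        params[i] -= lr * velocity[i]


# Minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w = [0.0]   # parameter, stored in a list so it can be updated in place
v = [0.0]   # momentum buffer, one entry per parameter
for _ in range(300):
    grad = [2.0 * (w[0] - 3.0)]
    sgd_momentum_step(w, grad, v)
```

Setting `momentum=0.0` reduces the step to plain SGD; the adaptive methods in the list additionally keep running statistics of squared gradients.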

Loss Functions

Cross Entropy

Negative Log Likelihood

Mean Squared Error

Binary Cross Entropy
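
Cross Entropy and Negative Log Likelihood are closely related: cross entropy on raw logits equals NLL applied to log-softmax outputs. The sketch below shows that relationship for a single sample using plain Python lists. The function names are illustrative and are not taken from Flint's API.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def nll_loss(log_probs, target):
    """Negative log likelihood of the target class index."""
    return -log_probs[target]


def cross_entropy(logits, target):
    """Cross entropy expressed as NLL applied to log-softmax outputs."""
    return nll_loss(log_softmax(logits), target)


loss = cross_entropy([2.0, 1.0, 0.1], target=0)
```

Composing the two steps this way avoids computing `log(softmax(x))` directly, which can underflow for large logit gaps.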

Activation Functions

ReLU

Sigmoid

Tanh

Leaky ReLU

GELU

Initializers

Fill with zeros / ones / other given constants

Uniform / Normal

Xavier (Glorot) uniform / normal (Understanding the Difficulty of Training Deep Feedforward Neural Networks. Xavier Glorot and Yoshua Bengio. AISTATS 2010.)

Kaiming (He) uniform / normal (Delving Deep into Rectifiers: Surpassing Human-level Performance on ImageNet Classification. Kaiming He, et al. ICCV 2015.)

LeCun uniform / normal (Efficient Backprop. Yann LeCun, et al. 1998.)
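
The three schemes above differ mainly in how they scale the sampling range by the layer's fan-in and fan-out. The sketch below computes the uniform-distribution bounds from the cited papers, assuming a gain of 1 for Xavier and the ReLU gain of sqrt(2) for Kaiming. The helper names are hypothetical, and nested lists stand in for NumPy arrays.

```python
import math
import random


def xavier_uniform_bound(fan_in, fan_out):
    # Glorot & Bengio: U(-a, a) with a = sqrt(6 / (fan_in + fan_out))
    return math.sqrt(6.0 / (fan_in + fan_out))


def kaiming_uniform_bound(fan_in):
    # He et al., with ReLU gain sqrt(2): a = sqrt(6 / fan_in)
    return math.sqrt(6.0 / fan_in)


def lecun_uniform_bound(fan_in):
    # LeCun et al.: target variance 1 / fan_in, so a = sqrt(3 / fan_in)
    return math.sqrt(3.0 / fan_in)


def uniform_matrix(rows, cols, bound, seed=0):
    """Fill a rows x cols nested list with samples from U(-bound, bound)."""
    rng = random.Random(seed)
    return [[rng.uniform(-bound, bound) for _ in range(cols)]
            for _ in range(rows)]


fan_in, fan_out = 256, 128
bound = xavier_uniform_bound(fan_in, fan_out)
weights = uniform_matrix(fan_out, fan_in, bound)
```

The bounds follow from the variance of U(-a, a), which is a² / 3; the normal variants use the corresponding standard deviation directly.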

Others

Dataloaders

Acknowledgements

Flint is inspired by the following projects: